After the Deadline – Open Sourced!

After the Deadline, an intelligent checker for spelling and grammar, is now free software. The server software is available under the GNU General Public License.

We’ve created a portal at http://open.afterthedeadline.com where you can:

- Download the GPL source code, models, and rule set powering After the Deadline

- Learn how to add rules and find your way around the code

- Get data from Wikipedia and Project Gutenberg to regenerate models if you need to

We’re also announcing a jQuery API for After the Deadline. Now you can add an AtD check to a DIV or TEXTAREA with little effort. This is the same API powering our Intense Debate plugin.

Our goal with this project is to help people write better, everywhere. Now all the tools are available. We look forward to seeing what you do with them.

After the Deadline is an Automattic project. Automattic contributes to WordPress.org and operates WordPress.com, the Akismet anti-spam service, the PollDaddy survey tool, Gravatar, and the Intense Debate distributed comment system.

How to write a great blog comment (hint: spell check!)

I pay so much attention to what I write in my blog posts, trying to make the content balanced, and get the presentation correct. People judge me on my ability (or lack of) to write well. Comments also matter and today I found a post where one of my favorite writers, Grammar Girl, addresses this topic:

Quickly, here are Grammar Girl’s rules to write a great comment:

- Rule #1 — Determine Your Motivation — why are you writing the comment?

- Rule #2 — Provide Context — state what you’re responding to in the post or comments

- Rule #3 — Be Respectful — others may read what you write in the future, say only what you’d say to their face

- Rule #4 — Make a Point — “me too” posts are a waste of time, have substance

- Rule #5 — Know What You’re Talking About — speak with some authority or cite something useful

- Rule #6 — Make One Point per Comment — keep it focused and on track

- Rule #7 — Keep it Short — brevity is your friend, it’s a comment not the post

- Rule #8 – Link Carefully — you don’t want to come off as a spammer, people will call you on it

- Rule #9 — Proofread — people judge you on how you write!

And finally, my reason for writing this post in the first place:

Grammar Girl mentions readers should use a browser with a spellchecker to help avoid the troll who jumps in with: “What do you know about chocolate storage, you can’t even spell ‘their.’” Of course, no browser spell checker that I know of will help you if you misuse there or their. Another option is to expect users to copy and paste into a word processor or PolishMyWriting.com. I do this sometimes, but it’s time-consuming.

If you run a blog and want to help your readers, you can install the Intense Debate comment system and enable the After the Deadline plugin for it. Then your readers will have access to a hyper-accurate spell checker, misused word detection, and grammar checking. I don’t have proof of it, but who knows, maybe well written comments will help raise the level of conversation on your site. Let me know!

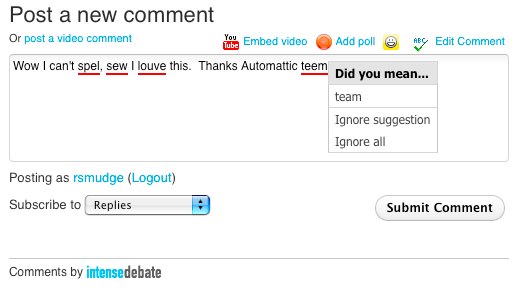

Spell Check Comments with Intense Debate and AtD

I’ve heard several requests to make spell and grammar checking available in blog comments. Today we’ve taken the first step towards that with an After the Deadline plugin for Intense Debate — the premier distributed comment system for websites and blogs.

Installation is a matter of visiting their plugin page and clicking activate. Nothing else to it. You don’t even need an AtD API key. AtD/ID checks spelling, misused words, and some grammar issues.

If you want to see AtD/ID in action, visit the Intense Debate blog where they’re covering the story too.

http://blog.intensedebate.com/2009/10/08/after-the-deadline-intensedebate-plugin/

The AtD/ID plugin works with TypePad, Blogger, WordPress, and wherever you choose to install the ID widget. I’ll consider this effort successful when someone publicly asks “why does my comment field have better writing correction than my publishing platform?” <G>

P.S. source code for the plugin is available and I’ll be posting an AtD/jQuery API soon. Keep an eye out for that.

AtD Updates – Split Words and Possessive Errors

I’m back from my vacation. Ok, I’ve been back for over a week now. I’m working on some stuff that will make it easier to add AtD to more applications. In the meantime, from your suggestions I’ve added some new rules to AtD. Besides the usual tweaking, here is what you get:

The spell checker now splits the misspelled word and rates these split phrases with the other suggestions. This means that when you type alot, you’ll now see a lot as the top suggestion. It also means you’ll see the right thing when you accidentally run two words together, such as atleast -> at least. I was notified about the latter error on Twitter.
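To make the split-word idea concrete, here is a minimal Python sketch. It only generates the candidate splits; how AtD rates them against the other spelling suggestions is not described here, so that step is left out. The function name and the tiny word list are assumptions made for illustration.

```python
def split_candidates(word, dictionary):
    """Return every two-word split of `word` where both halves are known words."""
    candidates = []
    for i in range(1, len(word)):
        left, right = word[:i], word[i:]
        if left in dictionary and right in dictionary:
            candidates.append(left + " " + right)
    return candidates


# Toy word list for illustration; the real dictionary is far larger.
DICTIONARY = {"a", "at", "lot", "least", "east"}

print(split_candidates("alot", DICTIONARY))
print(split_candidates("atleast", DICTIONARY))
```

Each candidate would then be scored alongside the ordinary spelling suggestions, so a split only reaches the top of the list when it fits better than the alternatives.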

Our local Media Engineer, Raanan, noted that AtD didn’t find an error in the phrase “if this is your companies way of doing support”. If you’re curious, here is what the process looks like:

<raanan> raffi: tried “I wonder if this is your companies way of providing support”

<raanan> AtD didn’t flag it

<raffi> I can add some grammar rules for your followed by a plural noun and try to correct it to a possessive noun

<raffi> your .*/NNS::word=your \1:base’s::pivots=\1,\1:base’s

<raffi> whether that catches some real errors or not is up for debate, can try it though

<raanan> could be interesting

<raffi> I’m trying it now

<raffi> rule development is taking an error like you gave me, going through some steps to make a rule for it, and testing it on a bunch of text I have laying around

<raffi> if it finds errors, it gets included–if it doesn’t… it goes nowhere

<raffi> or gets tweaked

<raffi> if it flags too many things as correct, I keep trying to refine it (can usually be done) until it just catches errors (although it might miss some errors to keep from flagging correctly written stuff)

<raffi> when I first made the grammar checker, I spent 3 weeks straight doing that, it got very tedious

<raffi> $ ./bin/testr.sh test.r raanan.txt

<raffi> Warning: Dictionary loaded: 124314 words at dictionary.sl:50

<raffi> Warning: Looking at: your|companies|way = 3.8133735008675426E-5 at testr.sl:24

<raffi> Warning: Looking at: your|company’s|way = 2.955364463172345E-4 at testr.sl:24

<raffi> I wonder if this is your companies way of providing support

<raffi> I/PRP wonder/VBP if/IN this/DT is/VBZ your/PRP$ companies/NNS way/NN of/IN providing/VBG support/NN

<raffi> 0) [ACCEPT] is, your companies -> @(‘your company’s’)

<raffi> id => c17ed0984ed4d01ac172f0afd95ee00c

<raffi> pivots => \1,\1:possessive

<raffi> path => @(‘your’, ‘.*’) @(‘.*’, ‘NNS’)

<raffi> word => your \1:possessive

<raffi> ding

<raffi> let’s see if that works against a bunch of written text

And it did. I tested a broader version of the rule against a big corpus of written text and found several errors with only one false positive. Here are the final rules:

That|The|the|that|Your|your|My|my|Their|their|Her|her|His|his .*/NNS .*/NN::word= \1:possessive \2::pivots=\1,\1:possessive
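The rule above can be read as: a trigger word, then a plural noun (NNS), then a singular noun (NN), with the plural swapped for its possessive form when that fits the context better. Below is a rough Python sketch of that logic, not AtD's actual implementation. The `BASE_FORM` lookup and the plain "higher score wins" comparison are assumptions; the two scores are the ones printed in the test run earlier in this post.

```python
# Trigger words from the rule, lowercased.
TRIGGERS = {"that", "the", "your", "my", "their", "her", "his"}

# Hypothetical stand-ins for AtD's lexicon and language model.
BASE_FORM = {"companies": "company"}
CONTEXT_SCORE = {
    ("your", "companies", "way"): 3.8133735008675426e-5,
    ("your", "company's", "way"): 2.955364463172345e-4,
}


def possessive_suggestions(tagged):
    """Yield (index, original, suggestion) for trigger + NNS + NN sequences
    where the possessive form scores higher in context."""
    for i in range(len(tagged) - 2):
        (w0, _), (w1, t1), (w2, t2) = tagged[i], tagged[i + 1], tagged[i + 2]
        if w0.lower() not in TRIGGERS or t1 != "NNS" or t2 != "NN":
            continue
        if w1 not in BASE_FORM:
            continue
        fixed = BASE_FORM[w1] + "'s"
        before = CONTEXT_SCORE.get((w0.lower(), w1, w2), 0.0)
        after = CONTEXT_SCORE.get((w0.lower(), fixed, w2), 0.0)
        if after > before:
            yield i + 1, w1, fixed


SENTENCE = [
    ("I", "PRP"), ("wonder", "VBP"), ("if", "IN"), ("this", "DT"),
    ("is", "VBZ"), ("your", "PRP$"), ("companies", "NNS"), ("way", "NN"),
    ("of", "IN"), ("providing", "VBG"), ("support", "NN"),
]

print(list(possessive_suggestions(SENTENCE)))
```

The statistical check is what keeps a broad pattern like this from flagging every plural noun that happens to precede another noun.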

If you have ideas to enhance After the Deadline or found a clear cut error that it doesn’t catch, let me know. I’m happy to look at it.


Statistical Grammar Correction or Not…

One thing that makes AtD so powerful is using the right tool for the right job. AtD uses a rule-based approach to find some errors and a statistical approach to find others. The misused word detection feature is the heaviest user of the statistical approach.

Last week I made great progress on the misused word detection making it look at the two words (trigrams) before the phrase in question instead of just one (bigrams). There were several memory challenges to get past to make this work in production, but it happened and I was happy with the results.

Before this, I just looked at the one word before and after the current word. All well and good but for certain kinds of words (too/to, their/there, your/you’re) this proved useless as it almost always guessed wrong. For these words I moved to a rule-based approach. Rules are great because they work and they’re easy to make. The disadvantage of rules is a lot of them are required to get broad coverage of the errors people really make. The statistical approach is great because it can pick up more cases.
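For readers unfamiliar with the terms, here is a small Python sketch of how a trigram lets you condition on the two previous words. It uses a plain count-based (maximum-likelihood) estimate over a toy token list; that estimator and the function names are assumptions for illustration, and AtD's production language model is certainly more involved.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def trigram_probability(tokens, w1, w2, w3):
    """Estimate P(w3 | w1 w2) as count(w1 w2 w3) / count(w1 w2)."""
    bigrams = ngram_counts(tokens, 2)
    trigrams = ngram_counts(tokens, 3)
    if bigrams[(w1, w2)] == 0:
        return 0.0
    return trigrams[(w1, w2, w3)] / float(bigrams[(w1, w2)])


# Toy corpus for illustration only.
tokens = "i want to go there and i want to be there".split()
print(trigram_probability(tokens, "want", "to", "go"))
```

A bigram model would only see "to" before the target word; the trigram also sees "want", which is the extra context that helps with pairs like too/to.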

The Experiment

So with the advent of the trigrams I decided to revisit the statistical technology for detecting errors I use rules for. I focused on three types of errors just to see what kind of difference there was. These types were:

- Wrong verb tense (irregular verbs only) – Writers tend to have trouble with irregular verbs as each case has to be memorized. Native English speakers aren’t too bad but those just learning have a lot of trouble with these. An example set would be throw vs. threw vs. thrown vs. throws vs. throwing.

- Agreement errors (irregular nouns only) – Irregular nouns are nouns that have plural and singular cases one has to memorize. You can’t just add an s to convert them. An example is die vs. dice.

- Confused words – And finally there are several confused words that the statistical approach just didn’t do much good for. These include it’s/its, their/there, and where/were.

You’ll notice that each of these types of errors relies on fixed words with a fixed set of alternatives. Perfect for use with the misused word detection technology.

My next step was to generate datasets for training and evaluating a neural network for each of these errors. With this data I then trained the neural network and compared the test results of this neural network (Problem AI) to how the misused word detector (Misused AI) did as-is against these types of errors. The results surprised me.

The Results

| | Problem AI Precision | Problem AI Recall | Misused AI Precision | Misused AI Recall |
|---|---|---|---|---|
| Irregular Verbs | 86.28% | 86.43% | 84.74% | 84.68% |
| Irregular Nouns | 93.91% | 94.36% | 93.77% | 94.65% |
| Confused Words | 85.38% | 83.64% | 83.85% | 81.90% |

Table 1. Statistical Grammar Checking with Trigrams

First, you’ll notice the results aren’t that great. Imagine what they were like before I had trigrams. 🙂 Actually, I ran those numbers too. Here are the results:

| | Problem AI Precision | Problem AI Recall | Misused AI Precision | Misused AI Recall |
|---|---|---|---|---|
| Irregular Verbs | 69.02% | 68.76% | 64.84% | 64.43% |
| Irregular Nouns | 83.66% | 83.66% | 82.93% | 82.93% |
| Confused Words | 80.15% | 77.82% | 76.15% | 73.27% |

Table 2. Statistical Grammar Checking with Bigrams

So, my instinct was at least validated. There was a big improvement going from bigrams to trigrams… just not big enough. What surprised me from these results is how close the AI trained for detecting the misused words performed to the AI trained for the problem at hand.

So this experiment showed there is no need to train a separate model for dealing with these other classes of errors. There is a slight accuracy difference but it’s not significant enough to justify a separate model.

Real World Results

The experiment shows that all I need (in theory) is a higher bias to protect against false positives. With higher biasing in place (my target is always <0.05% false positives), I decided to run this feature against real writing to see what kind of errors it finds. This is an important step because the tests come from fabricated data. If the mechanism fails to find meaningful errors in a real corpus of writing then it’s not worth adding to AtD.

The irregular verb rules found 500 “errors” in my writing corpus. Some of the finds were good and wouldn’t be picked up by rules, for example:

Romanian justice had no saying whatsoever

[ACCEPT] no, saying -> @('say')

However it will mean that once 6th May 2010 comes around the thumping that Labour get will be historic

[ACCEPT] Labour, get -> @('getting', 'gets', 'got', 'gotten')

Unfortunately, most of the errors are noise. The confused words and nouns have similar stories.

Conclusions

The misused word detection is my favorite feature in AtD. I like it because it catches a lot of errors with little effort on my part. I was hoping to get the same win catching verb tenses and other errors. This experiment showed misused word detection is fine for picking a better fit from a set and I don’t need to train separate models for different types of errors. This gives me another tool to use when creating rules and also gives me a flexible tool to use when trying to mine errors from raw text.

Top Ignored Phrases on WordPress.com

One advantage of working with WordPress.com is I have access to how you use After the Deadline and what you’re doing with it. The “Ignore Always” preferences are stored in our databases. I decided to see what I could learn by querying this information.

Here are the top ignored phrases:

1. alot
2. Kanye
3. lol
4. texting
5. everytime
6. Beyonce
7. cafe
8. youtube
9. haha
10. dough
11. wordpress
12. f***
13. Steelers
14. chemo

It’s kind of funny because this tells me something about what you’re writing about. Here are my responses to these.

2, 3, 4, 6, 9, 12, 13, and 14 are legitimate things you’re writing about and belong in the AtD dictionary. I added them to the master wordlists yesterday.

1 and 5 are errors but you like them, I’ll keep flagging them. 🙂 I do realize AtD needs to do a better job suggesting split words in the spelling corrections. It’s a day project and I already know how I plan to do this. Expect this soon.

8 and 11 are proper nouns and the correct capitalization exists inside of AtD. In fact AtD probably suggests the correct capitalization when marking these words as wrong. For example, at Automattic we consider WordPress the only proper spelling of WordPress. AtD honors this. Still, despite this, I noticed some folks have chosen to select ignore so WordPress does not come up as a misspelled word.

7 is in the AtD dictionary but with the accented character. I wonder if a plugin exists to automatically insert accents in words where they belong. Using AtD’s contextual model I could probably do this in a non-intrusive way with a high degree of accuracy. Any interest? In the meantime, I’m going to add words like 7 without the accent to the AtD lexicon.

12 is in the dictionary and isn’t checked by the misused word detector. I hypothesize that several writers feared their self-expression would be hindered and added it manually from their user settings page.

The word dough (10) is in the dictionary. My guess is the misused word detection loves to flag the word dough as incorrect despite the best intentions of our baking bloggers. This is precisely the kind of insight I wanted to gain from this little experiment.

Where I’d like to see AtD go…

I often get emails from folks asking for support for different platforms. I love to help folks and I’m very interested in solving a problem. I don’t have the expertise in all the platforms folks want AtD to support. Since it’s my occupation, I plan to keep improving AtD as a service, but here is my wish list of places where I’d like to see AtD wind up:

Wikipedia

I’d like to meet Jimmy Wales, one of the founders of Wikipedia. First because I love Wikipedia and it tickles me pink that so much knowledge is available at my fingertips. I’m from the last generation to grow up with hardbound encyclopedias in my home.

Second, because I’d love to explore how After the Deadline could help Wikipedia. AtD could help raise the quality of writing there.

Since the service will be open source there won’t be an IP cost necessarily. The only barriers are AtD support in the MediaWiki software and server side costs. Fortunately I’ve learned a lot about scaling AtD from working with WordPress.com and given a number of edits/hour and server specs, I could come up with a good guess about how much horsepower is really needed.

I’d love to write the MediaWiki plugin myself but unfortunately I’m so caught up trying to improve the core AtD product that this is beyond my own scope. If anyone chooses to pick this project up, let me know, I’ll help in any way I can.

Online Office Suites and Content Management Systems

There are a lot of people cutting and pasting from Word to their content management systems. There are many web applications either taking over the word processor completely or for niche tasks. For this shift to really happen these providers need to offer proofreading tools that match what the user would get in their word processor. None of us are supposed to depend on automated tools but a lot of us do.

Abiword, KWord, OpenOffice, and Scribus

It’s a tough sell to say a technology like AtD belongs in a desktop word processor. I say this because AtD consumes boatloads of memory. I could adapt it to keep limited amounts of data in memory and swap necessary stuff from the disk. If there isn’t a form of AtD suitable for plugging into these applications, I hope someone clones the project and adapts it to these projects. If someone chooses to port AtD to C, let me know, I’ll probably give a little on my own time and will gladly answer questions.

Attempting to Detect and Correct Out of Place Plurals and Singulars

Welcome to After the Deadline Sing-A-Long. I’m constantly trying different experiments to catch my (and occasionally your) writing errors. When AtD is open sourced, you can play along at home. Read on to learn more.

Today, I’m starting a new series on this blog where I will show off experiments I’m conducting with AtD and share the code and ideas I use to make them happen. I call this After the Deadline Sing-A-Long. My hope is that when I finally tar up the source code and make AtD available, you can replicate some of these experiments, try your own ideas, make a discovery of your own, and send it to me.

Detecting and Correcting Plural or Singular Words

One of the mistakes I make most is to accidentally add an ‘s’ to a word making it plural when I really wanted it to be singular. My idea? Why not take every plural verb and noun and convert them to their singular form and see if the singular form is a statistically better fit.

AtD can do this. The grammar and style checker are rule-based but they also use the statistical language model to filter out false positives. To set up this experiment, I created a rule file with:

.*/NNS::word=:singular::pivots=,:singular

.*/VBZ::word=:singular::pivots=,:singular

AtD rules each exist on their own line and consist of declarations separated by two colons. The first declaration is a pattern that represents the phrase this rule should match. It can consist of one or more [word pattern]/[tag pattern] sequences. The tag pattern matches the part-of-speech tag (think noun, verb, etc.). After the pattern come the named declarations. The word= declaration is what I’m suggesting in place of the matched phrase. Here I’m converting any plural noun or verb to a singular form. The pivots specify the parts of the phrase that have changed, to inform the statistical filtering I mentioned earlier.
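The rule layout described above is simple enough to pull apart in a few lines. The following Python sketch is not AtD's parser (AtD itself is not written in Python); it is only meant to show the structure. The function names are made up, and treating a missing tag pattern as `.*` is an assumption based on the `path` lines that the test tool prints.

```python
def parse_token(token):
    """Split one word/tag element; a missing tag pattern is treated as '.*'."""
    word, sep, tag = token.rpartition("/")
    if not sep:
        return (token, ".*")
    return (word, tag)


def parse_rule(line):
    """Split one rule line into its pattern and its named declarations."""
    parts = line.strip().split("::")
    # The first declaration is the pattern: space-separated word/tag elements.
    pattern = [parse_token(token) for token in parts[0].split()]
    # The remaining declarations are name=value pairs such as word= or pivots=.
    declarations = dict(part.split("=", 1) for part in parts[1:])
    return pattern, declarations


pattern, declarations = parse_rule(".*/NNS::word=:singular::pivots=,:singular")
print(pattern)
print(declarations)
```

Keeping one rule per line with `::` separators means a rule file can be read with nothing more than string splitting, which makes quick experiments cheap.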

The next step is to create a file with some examples to test with. I generally do this to see if the rule does what I want it to do. Here are two of the sentences I tried:

There are several people I never had a chance to thanks publicly.

After the Deadline is a tool to finds errors and correct them.

So, with these rules in place, here is what happened when I tested them:

atd@build:~/atd$ ./bin/testr.sh plural_to_singular.rules examples.txt

Warning: Dictionary loaded: 124264 words at dictionary.sl:50

Warning: Looking at: several|people|I = 0.003616585140061973 at testr.sl:24

Warning: Looking at: several|person|I = 1.1955063236931511E-4 at testr.sl:24

Warning: Looking at: to|thanks|publicly = 1.25339251574261E-6 at testr.sl:24

Warning: Looking at: to|thank|publicly = 1.7004358463574743E-4 at testr.sl:24

There are several people I never had a chance to thanks publicly.

There/EX are/VBP several/JJ people/NNS I/PRP never/RB had/VBD a/DT chance/NN to/TO thanks/NNS publicly/RB

0) [REJECT] several, people -> I

id => 3095c361e8beeb60abebed29fe5657be

pivots => ,:singular

path => @('.*', 'NNS')

word => :singular

1) [ACCEPT] to, thanks -> @('thank')

id => 3095c361e8beeb60abebed29fe5657be

pivots => ,:singular

path => @('.*', 'NNS')

word => :singular

Warning: Looking at: Deadline|is|a = 0.05030783620533642 at testr.sl:24

Warning: Looking at: Deadline|be|a = 0.00804979134152438 at testr.sl:24

Warning: Looking at: to|finds|errors = 0.0 at testr.sl:24

Warning: Looking at: to|find|errors = 0.0024240611254462076 at testr.sl:24

Warning: Looking at: finds|errors|and = 2.5553084536790455E-5 at testr.sl:24

Warning: Looking at: finds|error|and = 3.14416280096839E-4 at testr.sl:24

After the Deadline is a tool to finds errors and correct them.

After/IN the/DT Deadline/NN is/VBZ a/DT tool/NN to/TO finds/NNS errors/NNS and/CC correct/NN them/PRP

0) [REJECT] Deadline, is -> a

id => 1ab5cd35b6146cbecbc31c8b2a6d8e96

pivots => ,:singular

path => @('.*', 'VBZ')

word => :singular

1) [ACCEPT] to, finds -> @('find')

id => 3095c361e8beeb60abebed29fe5657be

pivots => ,:singular

path => @('.*', 'NNS')

word => :singular

2) [ACCEPT] finds, errors -> @('error')

id => 3095c361e8beeb60abebed29fe5657be

pivots => ,:singular

path => @('.*', 'NNS')

word => :singular

And that was that. After I ran the experiment against a more substantial amount of text, I found too many phrases were flagged incorrectly and I didn’t find many legitimate errors of this type. If I don’t see many obvious mistakes when applying a rule against several books, blogs, and online discussions–I ignore the rule.

In this case, the experiment showed this type of rule fails. There are options to make it better:

- Set a directive to raise the statistical threshold

- Try looking at more context (two words out, instead of one)

- Look at the output and try to create rules that look for more specific situations where this error occurs

Tweaking the Misused Word Detector

I’ve been hard at work improving the statistical misused word detection in After the Deadline. Nothing in the world of NLP is ever perfect. If computers understood English we’d have bigger problems like rapidly evolving robots that try to enslave us. I’m no longer interested in making robots to enslave people, that’s why I left my old job.

AtD’s statistical misused word detection relies on a database of confusion sets. A confusion set is a group of words that are likely to be mistaken for each other. An example is cite, sight, and site. I tend to type right when I mean write, but only when I’m really tired.

AtD keeps track of about 1,500 of these likely to be confused words. When it encounters one in your writing, it checks if any of the other words from the confusion set are a statistically better fit. If one of the words is a better fit then your old word is marked as an error and you’re presented with the options.
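In code, the confusion-set check might look something like the Python sketch below. The two sets come from the examples above; the scores are made-up numbers and `toy_score` is a hypothetical stand-in for the language model, so treat this as an outline of the idea and not as AtD's implementation.

```python
CONFUSION_SETS = [{"cite", "sight", "site"}, {"right", "write"}]

# Made-up context scores for illustration; a real model would supply these.
TOY_SCORES = {
    ("to", "write", "a"): 4e-3,
    ("to", "right", "a"): 1e-4,
}


def toy_score(previous, word, following):
    """Hypothetical scoring function: how well `word` fits between its neighbors."""
    return TOY_SCORES.get((previous, word, following), 0.0)


def better_fits(previous, word, following, score=toy_score):
    """Return confusion-set alternatives that fit the context better than `word`."""
    for group in CONFUSION_SETS:
        if word in group:
            current = score(previous, word, following)
            better = [alt for alt in group
                      if alt != word and score(previous, alt, following) > current]
            return sorted(better,
                          key=lambda alt: score(previous, alt, following),
                          reverse=True)
    return []


print(better_fits("to", "right", "a"))
print(better_fits("to", "write", "a"))
```

An empty list means the word stays unmarked; a non-empty list means the word is flagged and the alternatives are offered as suggestions, best fit first.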

AtD’s misused word detection uses the immediate left and right context of the word to decide which word is the better fit. I have access to this information using the bigram language model I assembled from public domain books, Wikipedia, and many of your blog entries. Here is how well the statistical misused word detection fares with this information:

| | Precision | Recall |
|---|---|---|
| Gutenberg | 93.11% | 92.37% |
| Wikipedia | 93.64% | 93.04% |

Table 1. AtD Misused Word Detection Using Bigrams

Of course, I’ve been working to improve this. Today I got my memory usage under control and I can now afford to use trigrams or sequences of three words. With a trigram I can use the previous two words to try and predict the third word. Here is how the statistical misused word detection fares with this extra information:

| | Precision | Recall |
|---|---|---|
| Gutenberg | 97.30% | 96.75% |
| Wikipedia | 97.12% | 96.56% |

Table 2. AtD Misused Word Detection Using Bigrams and Trigrams

AtD uses neural networks to decide how to weight all the information fed to it. These tables represent the before and after of introducing trigrams. As you can see the use of trigrams significantly boosts precision (how often AtD is right when it marks a word as wrong) and recall (how often AtD marks a word as wrong when it is wrong).
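The two measures can be written down directly from those definitions. Here is a short Python sketch; the counts in the example are invented purely to show the arithmetic and are not taken from the tables.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision: share of flagged words that really were wrong.
    Recall: share of wrong words that were flagged."""
    flagged = true_positives + false_positives
    actual_errors = true_positives + false_negatives
    precision = true_positives / float(flagged) if flagged else 0.0
    recall = true_positives / float(actual_errors) if actual_errors else 0.0
    return precision, recall


# Invented counts: 90 correct flags, 10 wrong flags, 30 missed errors.
precision, recall = precision_recall(90, 10, 30)
print("precision=%.2f recall=%.2f" % (precision, recall))
```

The two numbers pull against each other: flagging less aggressively usually raises precision and lowers recall.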

Lies…

If you think these numbers are impressive (and they’re pretty good), you should know a few things. The separation between the training data and testing data isn’t very good. I generated datasets for training and testing, but both datasets were drawn from text used in the AtD corpus. More clearly put–these numbers assume 100% coverage of all use cases in the English language because all the training and test cases have trigrams and bigrams associated with them. If I were writing an academic paper, I’d take care to make this separation better. Since I’m benchmarking a feature and how new information affects it, I’m sticking with what I’ve got.

This feature is pretty good, and I’m gonna let you see it, BUT…

I have some work to do yet. In production I bias against false positives which hurts the recall of the system. I do this because you’re more likely to use the correct word and I don’t want AtD flagging your words unless it’s sure the word really is wrong.

The biasing of the bigram model put the statistical misused word detection at a recall of 65-70%. I expect to have 85-90% when I’m done experimenting with the trigrams. My target false positive rate is under 0.5% or 1:200 correctly used words identified as wrong.
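One simple way to picture the biasing is as a margin the alternative has to clear before a word gets flagged. The Python sketch below assumes a multiplicative bias on raw scores, which is only a guess at the general shape; the post does not say how AtD applies its bias. The second function just spells out the false positive arithmetic.

```python
def should_flag(current_score, alternative_score, bias):
    """Flag only when the alternative beats the current word by a factor of `bias`."""
    return alternative_score > bias * current_score


def false_positive_rate(wrongly_flagged, correct_words):
    """Share of correctly used words that were marked as errors."""
    return wrongly_flagged / float(correct_words)


# With no bias a slightly better alternative triggers a flag; with a bias of 10 it does not.
print(should_flag(0.002, 0.003, bias=1.0))
print(should_flag(0.002, 0.003, bias=10.0))

# One wrongly flagged word in 200 correct ones is a rate of 0.5%.
print("%.1f%%" % (100 * false_positive_rate(1, 200)))
```

Raising the bias suppresses borderline flags, which protects correct words at the cost of missing some real errors, and that is the recall drop described above.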

With any luck this updated feature will be pushed into production tomorrow.

Python Bindings for AtD

Miguel Ventura has been kind enough to contribute Python bindings for After the Deadline. You can get them at:

Here is how you use them:

import ATD

ATD.setDefaultKey("your API key")
errors = ATD.checkDocument("Looking too the water. Fixing your writing typoss.")

# Print each detected error with its context and suggested corrections
for error in errors:
    print("%s error for: %s **%s**" % (error.type, error.precontext, error.string))
    print("some suggestions: %s" % (", ".join(error.suggestions),))

Expected output:

grammar error for: Looking **too the**
some suggestions: to the
spelling error for: writing **typoss**
some suggestions: typos

These bindings are available under an MIT license. If you’d like to contribute AtD bindings for another programming language or program visit our AtD Developer Resources page to learn more about the XML API.
